Papers Under Review

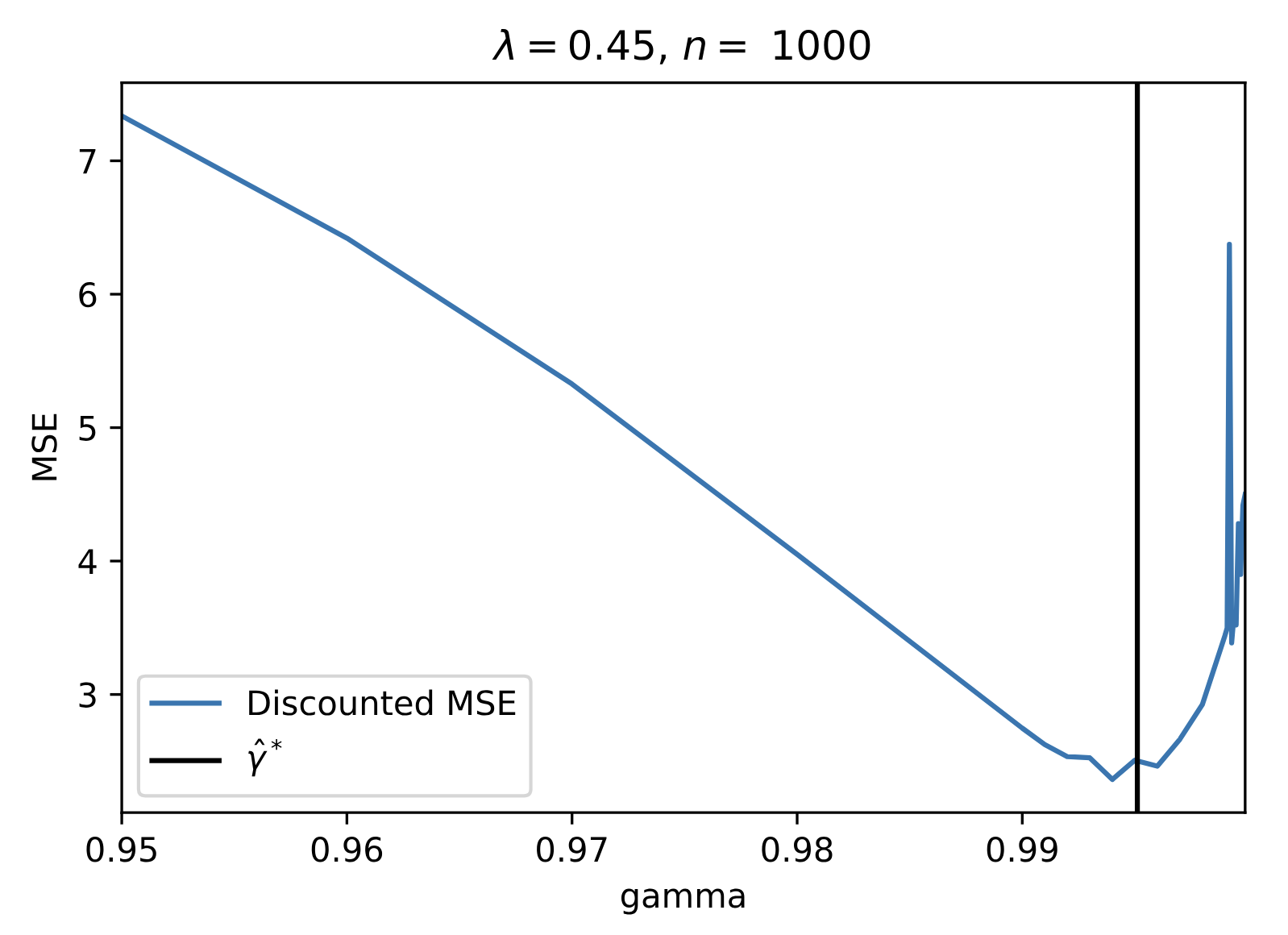

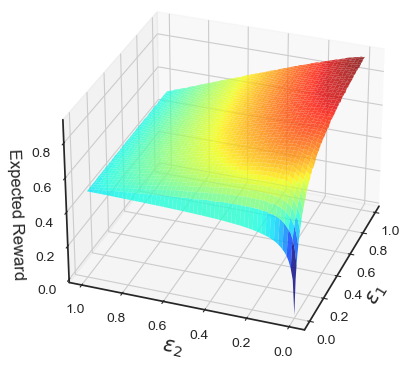

Optimization of Epsilon-Greedy Exploration

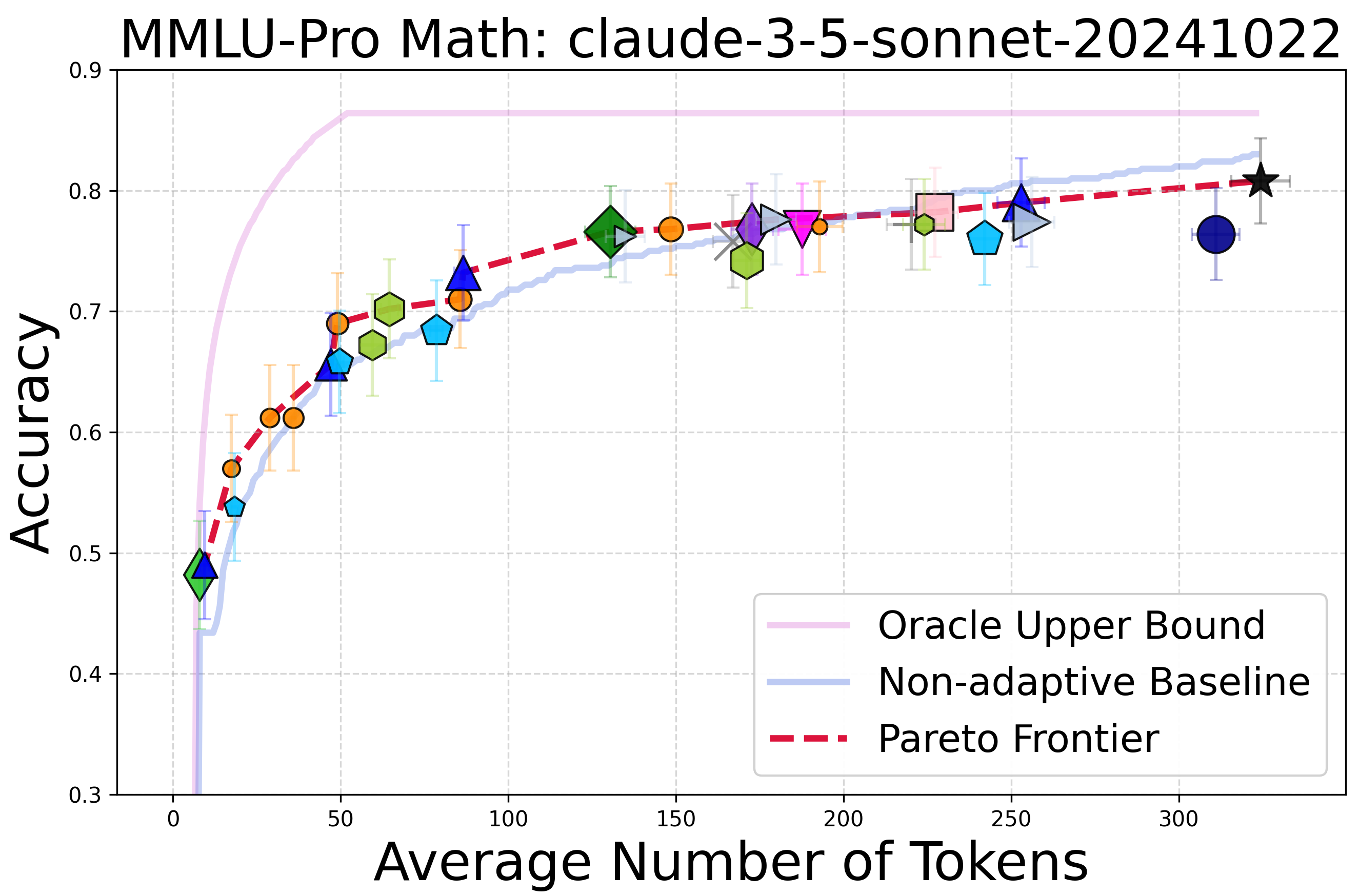

How Well do LLMs Compress Their Own Chain-of-Thought?

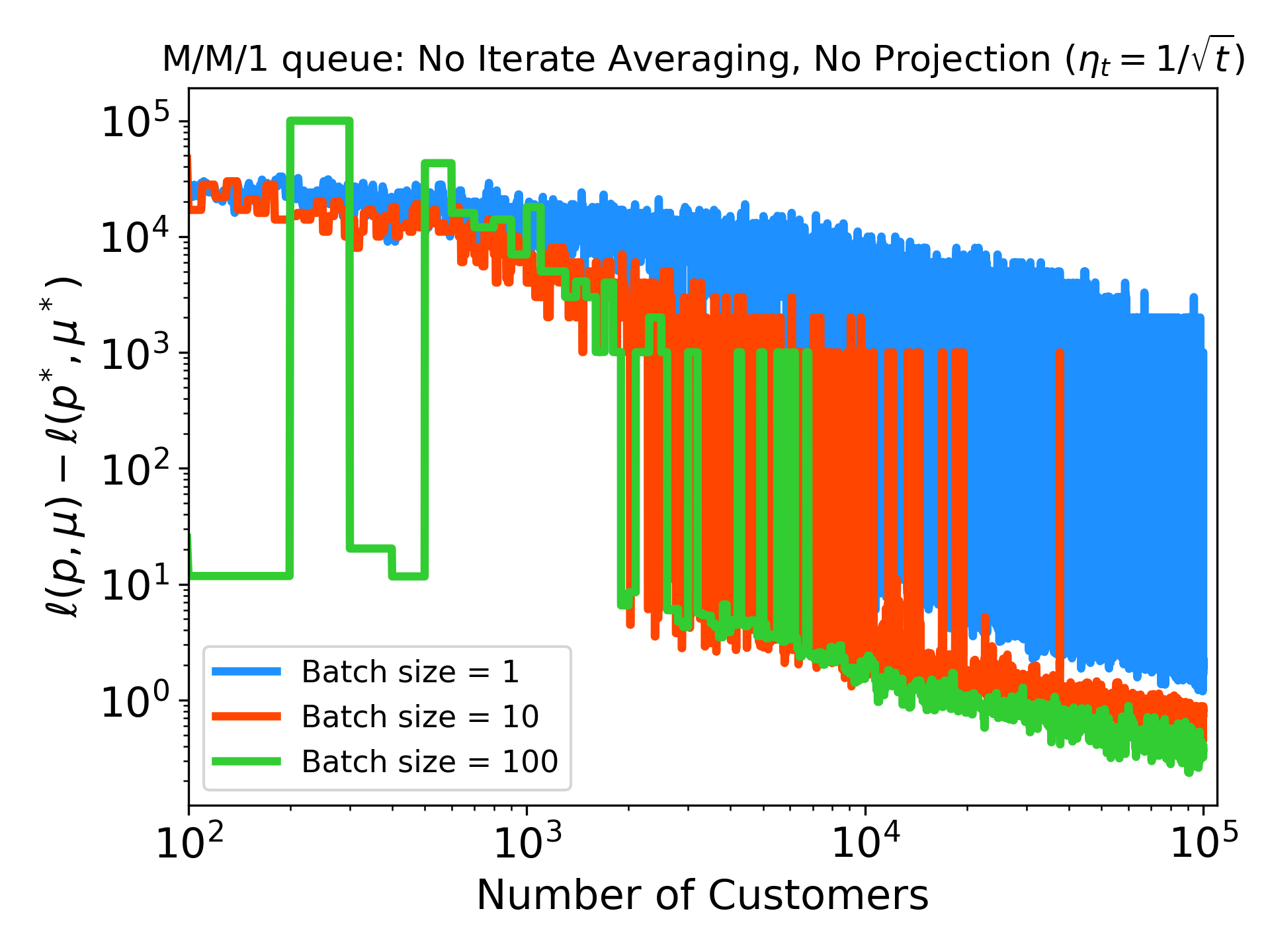

Differentiable Discrete Event Simulation for Queuing Network Control

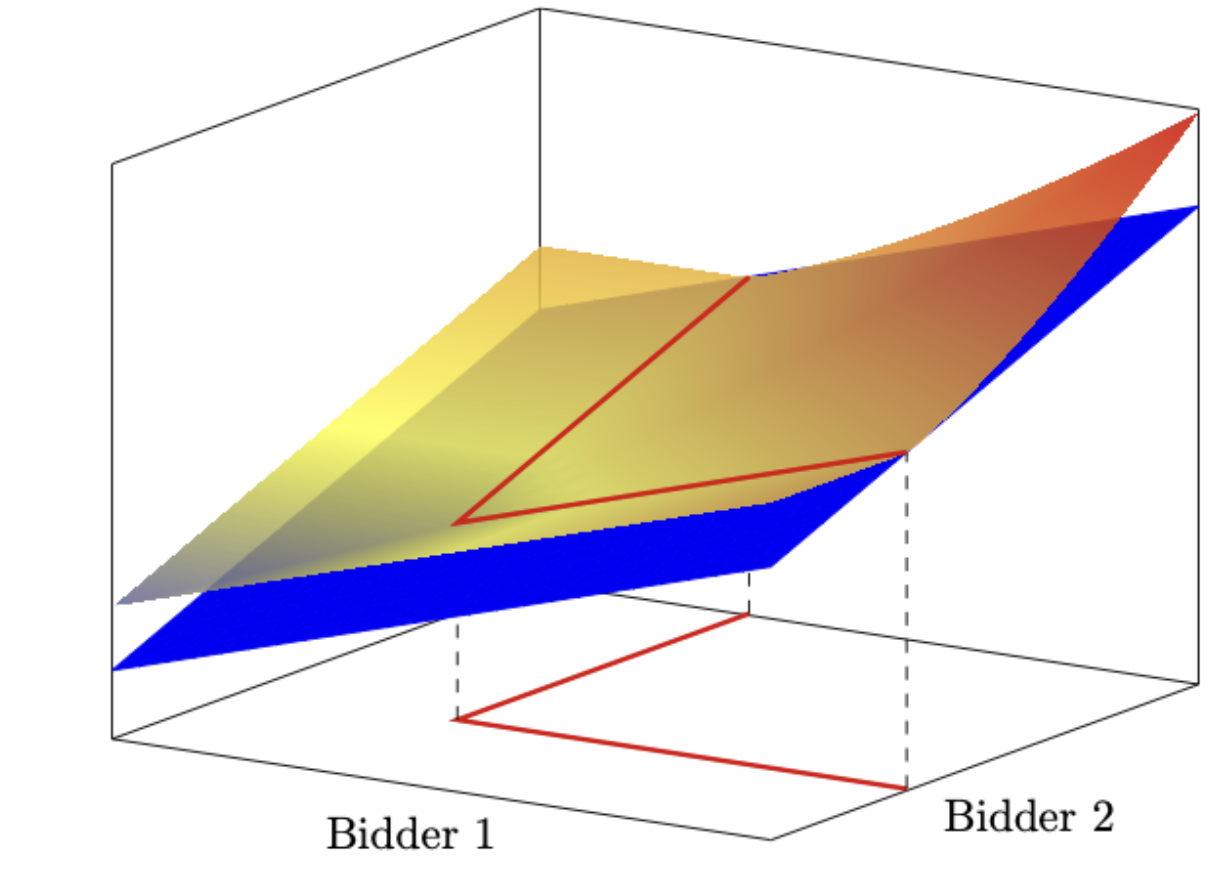

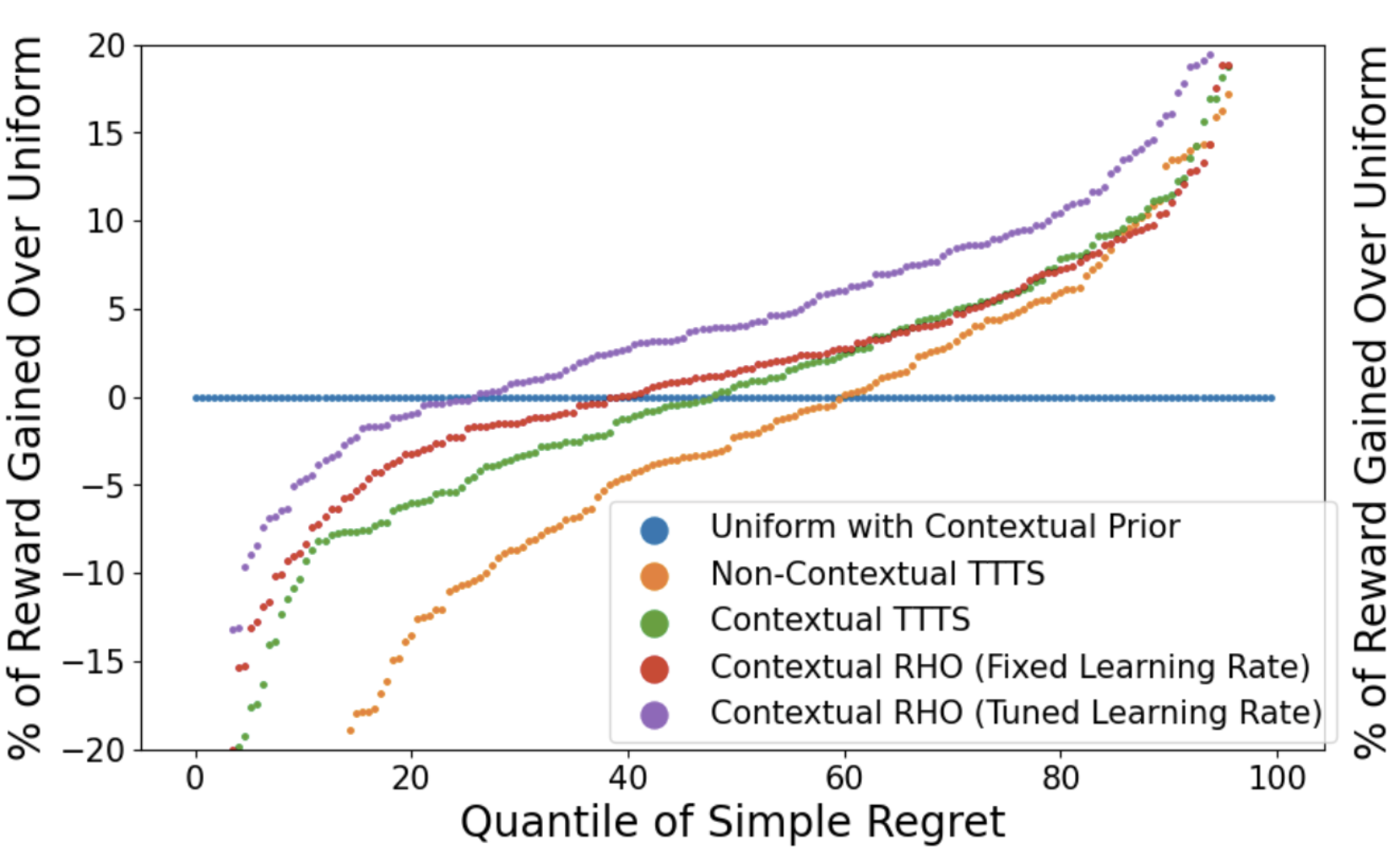

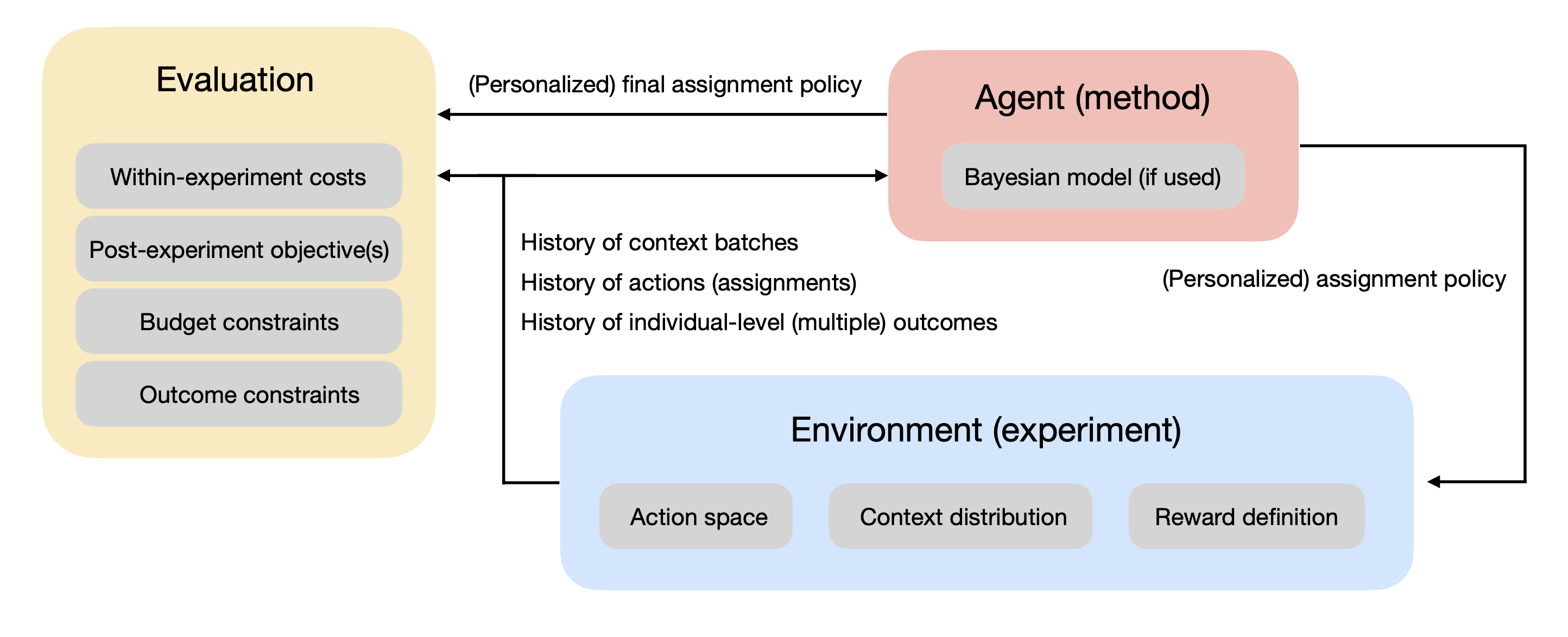

Mathematical Programming for Adaptive Experiments

Adaptive Experimentation at Scale: A Computational Framework for Flexible Batches

Stochastic Gradient Descent with Adaptive Data

Ethan Che, Jing Dong, and Xin T. Tong

AExGym: Benchmarks and Environments for Adaptive Experimentation |